“RAG Isn’t Dead” – Use Context Wisely

Meta’s Llama 4 Scout has recently made waves with its massive 10-million-token context window, promising revolutionary improvements for retrieval-augmented generation (RAG) and long-form reasoning tasks. But how does Scout truly perform when compared to other leading LLMs like Gemini 2.5 Pro and GPT-4o, especially as prompts push towards their context-length limits? In this analysis, we dive deep into Scout’s architecture, evaluate its practical strengths and weaknesses, explore its implications for developers, and examine the controversy surrounding allegations of benchmark training.

Architectural Innovations Enabling Long-Context Support

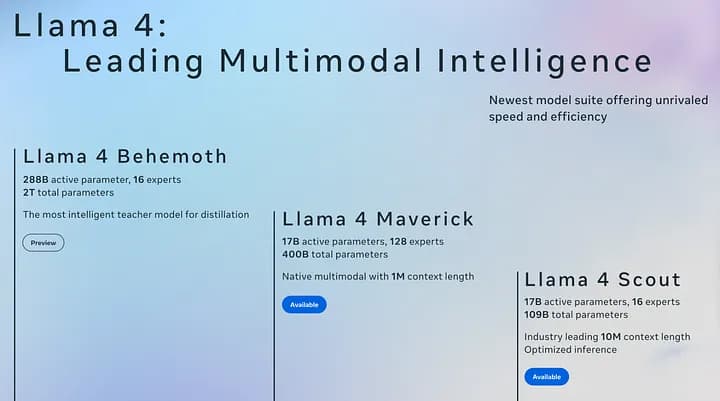

Mixture-of-Experts Architecture: Llama 4 Scout uses a Mixture-of-Experts (MoE) design with 16 expert subnetworks (109B total parameters, but only ~17B “active” per token) (1) . An MoE model has a router that directs each input token to a specialized expert, so only a fraction of the model’s weights are used for any given token . This yields a smaller active model (17B) handling each inference step, greatly improving efficiency without sacrificing overall capacity. For example, one expert might specialize in code and another in prose; the router sends a programming query to the coding expert, avoiding activating all 109B parameters unnecessarily. This architecture allows Llama 4 to maintain high quality while fitting on modest hardware (Scout runs on a single 80GB H100 GPU) (2). It also preserves speed – Scout’s first-token latency can be as low as ~0.39 s and throughput about 120 tokens/s in benchmarks (3) – despite the massive context window.

Interleaved Attention (iRoPE) Mechanism: To support an unprecedented 10 million token context window, Llama 4 Scout forgoes traditional positional embeddings in favor of Meta’s interleaved Rotary Positional Embedding (iRoPE) approach (4). The model interleaves “local” and “global” attention layers to handle long sequences. In local attention layers, the model attends to a limited window (e.g. 8K tokens) using Rotary Position Embeddings (RoPE) to capture relative positions. These local blocks are fully parallelizable and efficient, handling short-range dependencies. Interspersed are global attention layers that omit fixed positional encodings entirely, enabling them to attend beyond the 8K chunks and capture long-range context. By alternating local and global layers (hence “interleaved”), Llama 4 can theoretically handle extremely long sequences without running into position index limits. Notably, Llama 4 was only trained on sequences up to 256K tokens – far longer than previous generations but still far short of 10M . Beyond 256K, it must extrapolate its learned patterns, which is made feasible by the no-pos-embeddings strategy in global layers.

Dynamic Attention Scaling: One challenge at extreme context lengths is that attention scores can “flatten out,” making it hard for the model to focus on relevant parts of very long inputs. Llama 4 addresses this by scaling the query vectors as a function of position during inference. Once tokens exceed a threshold (e.g. after 8K tokens), the model gradually increases the magnitude of their query representation via a log-scale factor. In effect, tokens appearing later in the prompt are given a boost to ensure they still attract attention even when the context is millions of tokens long. This temperature-like scaling preserves short-range performance (within the first 8K) while enhancing long-range reasoning by preventing distant tokens from being completely overshadowed. Combined with MoE (which ensures enough model capacity to memorize and utilize long-term patterns), these attention innovations allow Llama 4 Scout to theoretically handle contexts “unbounded” in length – up to 10M tokens of text, equivalent to ~5 million words or ~32 MB of data in one prompt (5).

Multimodal Early-Fusion Backbone: In addition to long text, Llama 4 is natively multimodal. Both Scout and the larger Maverick use an early-fusion architecture where all model parameters jointly learn from text, images, audio, and video data (1). Unlike previous models that bolted on vision modules, Llama 4’s single network can ingest and reason over different modalities in the same context. For developers, this means you can provide an image or diagram alongside text in the prompt, and the model integrates them seamlessly. This is particularly useful for RAG use cases involving PDFs with figures or multimodal documents. The combination of MoE and early-fusion makes Llama 4 Scout a uniquely versatile foundation: it efficiently handles a huge context of mixed text and visuals in one model. (Notably, the full Scout model weighs ~200+ GB in memory, and serving its 10M-token context requires a very large key-value cache for attention. This has driven cloud providers to deploy Scout in managed serverless environments to hide the complexity of hosting such a model.

Performance on RAG Tasks vs. Gemini 2.5, GPT-4o, and o3-Mini

Llama 4 Scout’s RAG Behavior: Llama 4 Scout excels at ingesting large troves of retrieved data, but it has a tendency to always produce an answer, even if the retrieved context is insufficient. In a head-to-head RAG evaluation (using the RAGAS scoring framework), Scout answered every query – sometimes based on its own prior knowledge – whereas OpenAI’s GPT-4o would refuse to answer when context was missing (6). Specifically, when the retrieval step failed to find relevant documents (Context Precision/Recall = 0), Llama 4 nonetheless generated answers that “sounded relevant” to the questions, sticking to whatever context was given (even if it was unrelated). GPT-4o, by contrast, followed instructions strictly and replied “I don’t have the answer to the question.” if the provided docs didn’t contain it. In RAG terms, Llama 4 is more eager and generative, which can be useful for open-ended queries or when a plausible guess is better than no answer. However, this also means it may hallucinate – fabricating an answer that sounds valid – when the retrieval falls short. GPT-4o’s conservative approach yields higher precision (no answer is given unless supported by context), which is safer for high-stakes factual tasks Developers have observed that Llama 4’s answers are often fluent and on-topic but not always grounded in the retrieved evidence, whereas GPT-4o prioritizes factual grounding even if it means responding with a refusal. This difference suggests a trade-off: Scout may better handle “knowledge-gap” questions in a user-friendly way, but GPT-4o minimizes hallucinations by design.

Benchmark Quality and Reasoning: On standard QA and reasoning benchmarks relevant to RAG, Llama 4 Scout performs competitively, though it doesn’t always top the charts against larger proprietary models. Google’s Gemini 2.5 Pro currently leads many academic benchmarks – for example, scoring 84.0% on the GPQA science QA test, compared to ~71.4% by OpenAI’s GPT-4.5 (an updated GPT-4 model) (7). Meta did not claim that Scout surpasses Gemini in raw accuracy; in fact, Gemini 2.5 Pro outperforms Llama 4 Scout on several reasoning benchmarks (e.g. math, science). Llama 4’s strength lies in its extreme context length and efficiency rather than highest absolute accuracy. Meta’s own comparisons note that Gemini 2.5 Pro achieves higher raw scores in coding and reasoning, but is limited to a 1M token context – an order of magnitude smaller window Llama 4’s upcoming 400B-parameter sibling (Maverick) and especially the in-progress 2T-parameter Behemoth model are intended to close that quality gap while maintaining long context. Early results show Llama 4 Maverick (with many more experts) can outperform GPT-4o and Gemini 2.0 on certain benchmarks like multilingual understanding and image reasoning , but Scout (the 17B model) is tuned more for efficiency and accessibility. In summary, for RAG tasks that involve complex reasoning or code, Gemini 2.5 and GPT-4 class models still have an edge in accuracy. However, Scout is not far behind and often matches or beats older open models (Llama 3, Mistral, etc.) on knowledge and reasoning tests. Its open-source nature means it can be fine-tuned for domain-specific RAG use (e.g. on custom knowledge bases) which can mitigate some of the out-of-the-box gap.

Handling Maximum Context Length: A critical performance factor in RAG is how a model behaves as prompt size grows. Llama 4 Scout can technically ingest 10M tokens, but practical quality begins to degrade beyond the lengths it saw during training (~256K). As AI researcher Andriy Burkov noted, “The declared 10M context is virtual because no model was trained on prompts longer than 256k… if you send more than 256k tokens, you will get low-quality output most of the time.”. In other words, Llama 4’s long context capability is largely extrapolative – it wasn’t explicitly trained on multi-million-token examples, so it may struggle to maintain coherence if you truly push toward the 10M limit. By contrast, Google’s Gemini 2.5 Pro (1M context) appears to have been trained or architected to retain fidelity up to its full window. Anecdotal reports and internal tests indicate Gemini’s performance holds strong even at very long inputs: it scored 94.5% on MRCR, a multi-round coreference resolution challenge designed to test reference tracking over extremely long text sequences. One user observed that Gemini was “better [at 500K tokens] than other SOTAs are at 64K,” highlighting an impressive ability to utilize large contexts effectively (8). OpenAI’s GPT-4o, with a 128K token limit, lies between these extremes. It represents roughly a 4× increase over the original GPT-4’s 32K max. OpenAI hasn’t disclosed exactly how GPT-4o was trained for long context, but it likely involved some form of positional scaling or partial training on long sequences. Early adopters found that API and tooling limits sometimes prevented using the full 128K at launch (some encountered errors above 20K tokens due to system limits) (9) (10). When it works, GPT-4o can definitely handle documents on the order of tens of thousands of tokens, but at 6-figure context lengths it may not reliably leverage every detail – similar “lost in the middle” effects could arise if crucial information is buried very deep in the prompt. The smaller o3-mini variant shares the 128K context window but, being a lighter model, may have somewhat less capacity to memorize details across the full span (OpenAI hasn’t published how mini performs on, say, 100K-token prompts versus the full model). In general, all models see latency and memory use grow with context length, so hitting the max window can incur steep computational costs and some answer quality degradation. Llama 4 Scout’s clever engineering delays this degradation – for instance, its attention scaling trick helps it remain focused further out – but beyond a certain point it too will struggle to “see the forest for the trees.” Developers should treat the extreme limits (1M+ tokens) as emergency capacity or for experimentation, rather than something to use routinely in production without careful benchmarking of accuracy.

Latency and Throughput at Scale: With larger context comes increased latency. If you actually supply hundreds of thousands of tokens to these models, expect slower responses. Llama 4 Scout, by virtue of MoE and its 17B active size, is relatively fast per token – e.g. ~0.39s overhead + ~120 tokens/sec generation as noted – but that doesn’t account for the time to process the input prompt itself. Processing 100K tokens can add many seconds of compute even before the model starts generating output. GPT-4o, which is based on a much larger dense model (OpenAI doesn’t disclose its param count, but likely tens of billions), tends to be slower. In fact, OpenAI’s 128K context API often has noticeable delay; some of that is network overhead (since many use it via cloud API), and some is the model’s heavy lifting. Google’s Gemini 2.5, while extremely powerful, introduces an interesting twist: it uses “thinking” steps (Chain-of-Thought) internally (12) . This can improve accuracy but also means additional tokens generated and processed per query (they even count as output tokens for billing) (13). Thus Gemini might be slower or costlier for a given prompt length than a model that doesn’t do multi-step reasoning internally. Meanwhile, GPT-4o Mini is tuned for speed – OpenAI claims it’s ~60% cheaper and faster than GPT-3.5 Turbo while actually being smarter. It achieves this by having a reduced parameter count (exact size not public) and perhaps lower precision. This makes o3-mini attractive for high-volume RAG scenarios (like responding to thousands of support tickets) where the absolute highest accuracy is not required. It still benefits from the 128K context, but one might choose mini specifically to avoid the heavy latency of the full GPT-4o. In summary, Llama 4 Scout offers a sweet spot for latency vs. context – its architecture keeps serving costs low even for long prompts, whereas GPT-4o and Gemini 2.5, while highly capable, may demand more compute per token. The difference can be stark in cost terms: one analysis pegged GPT-4.5’s usage cost at nearly 350× Llama 4 Scout’s for a 1M-token workload. Open models like Llama are dramatically cheaper to run if you have the hardware, which is a key consideration for scaling RAG systems economically.

Developer Implications for RAG and Long-Form Reasoning

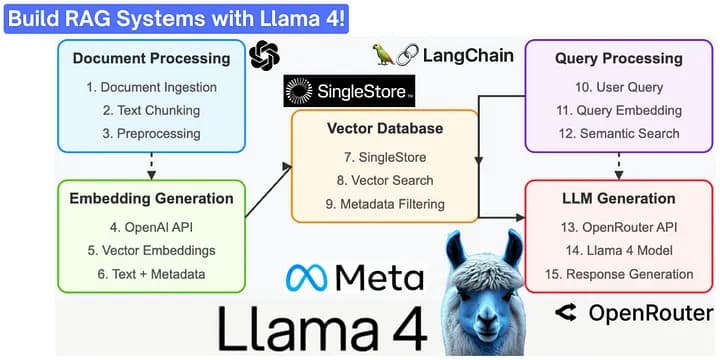

“RAG Isn’t Dead” – Use Context Wisely: Meta’s Llama 4 Scout may tempt developers to stuff the entire internet (or an enterprise’s entire knowledge base) into a single prompt. In practice, this is neither efficient nor reliable. Retrieval-Augmented Generation is still the recommended design pattern for large knowledge tasks. A massive context window helps — it means your RAG pipeline can include more passages at once — but you should retrieve only the most relevant chunks for each query, not blindly dump everything. Feeding too much noise can detract from model performance, akin to a human trying to read a library in one go. The Lost-in-the-Middle effect is real: models given a flood of data may lose track of what’s important. So, developers should continue to use vector search or other retrieval methods to filter documents, even if Scout could technically read them all. An expansive context window then lets you include contextual breadth: for example, you might include multiple documents (or long documents) in one prompt for the model to synthesize. Llama 4 Scout is particularly well-suited to summarizing or cross-analyzing many sources at once (since 100K+ tokens of combined text is feasible) – but those sources still need to be selected carefully.

Streaming and Memory Management: Because Scout can handle streaming inputs, developers can adopt a sliding window or chunk-processing approach for truly giant inputs. Instead of hitting the 10M limit outright, you might feed, say, 100K tokens at a time (e.g. chapters of a book or segments of a long meeting transcript) and allow the model to carry weights of earlier segments in its hidden state or via intermediate summaries. The interleaved attention mechanism essentially supports this by design, treating long input as chunks with global layers stitching them together. However, keep in mind the GPU memory requirements – a 10M-token context will consume a huge key-value cache. If self-hosting, you’d need multiple high-memory GPUs or distributed infrastructure. Using a hosted solution (like Cloudflare Workers AI or Azure AI) offloads this complexity. At launch, many platforms limit Llama 4’s context to around 131K tokens anyway (14), both for technical reasons and because there isn’t yet a strong demand to push beyond that in real apps. It’s wise to start with those lower limits and gradually increase context size as needed, monitoring latency and output quality.

Combining Modalities in RAG: One practical win for Llama 4 in RAG applications is its ability to accept images and audio in the prompt. Developers can build multimodal RAG systems – e.g. retrieving an instruction manual diagram and text, or an audio transcript and related documents, and feeding both to the model. Scout will understand combined contexts like “an image of a device + its spec sheet text” without needing separate vision models. Competing models also offer multimodality (GPT-4o can handle image and audio inputs, and Gemini 2.5 is multimodal as well , but Llama 4 provides this in an open-source package that you can fine-tune. For domain-specific use, one could fine-tune Scout on, say, PDF documents (text + layout images) to better handle visually structured data in retrieval. This flexibility is a boon for building robust RAG pipelines that involve more than just plain text.

Guardrails and Prompting Strategies: Because Llama 4 Scout will eagerly answer even when retrieval fails, developers should implement guardrails to reduce hallucinations. One approach is to add a system prompt that explicitly says “If you are not sure, say you don’t know.” However, as the RAGAS test showed, Scout tends to prioritize answering the user over strict compliance. Another approach is to post-process the answer: e.g. use a fact-checking step or cross-verify if the answer uses info from the provided context. Some developers pair Scout with a smaller verification model or with heuristic checks on whether the answer contains out-of-context info. In contrast, if using GPT-4o, you might need to prompt it to be more creative since it defaults to conservative answers. Understanding these behavioral differences lets you tailor the system prompt for each model – e.g., loosen GPT-4o’s constraints a bit in creative tasks, tighten Llama 4’s instructions in factual tasks.

Cost and Deployment Considerations: The open-source advantage of Llama 4 Scout can translate to major cost savings in production RAG systems. With OpenAI and Google models, you pay per token (and as noted, long contexts can become very expensive). Scout allows you to run inference on your own servers or through cost-competitive providers. IBM, Cloudflare, and others have already integrated Llama 4, often touting significantly lower cost per query. For startups building RAG apps on large document corpora, using Scout can mean the difference between a viable business model and one eaten alive by API fees. That said, hosting Scout isn’t trivial – you need the right hardware or cloud setup to achieve the throughput numbers Meta cites. Solutions like DeepSpeed, vLLM, or quantization can help reduce memory footprint and improve inference speed. Also consider hybrid approaches: one could use o3-mini or GPT-3.5 for simple queries and fall back to Llama 4 only for the heavy long-context queries, balancing cost and quality. The unit economics strongly favor having Llama 4 in the mix for long-form queries, given reports of >100× cost differences for large contexts.

Security and Privacy: An often overlooked aspect is data governance. Simply increasing the context window doesn’t eliminate the need for careful data handling. Enterprises using RAG with Llama 4 should note that pouring raw confidential data into a prompt has risks. As the F5 team pointed out, continuously feeding a model lots of data makes it harder to control access and can lead to inadvertent leaks through the model’s outputs. RAG, by retrieving snippets on demand, helps enforce access controls – you only retrieve data the user is allowed to see, and only that goes into the prompt. Even though Llama 4 is self-hostable (avoiding external API exposure), one must still ensure that prompt logs, memory, etc., are handled securely. Additionally, regional and regulatory compliance often demand that a model’s inputs be limited to what is necessary for a given task. A 10M-token prompt that indiscriminately includes personal data could violate policies. So developers should design RAG pipelines that remain data-efficient and compliant, using the long context to enhance capability, not to hoard sensitive info. In short, long context is a tool, not a replacement for retrieval: it should be used to augment RAG (e.g. allow more elaborate intermediate reasoning or to include broader context when needed) rather than to supplant the fundamental architecture of retrieve-then-read.

Controversy: Training on Benchmarks and Ethical Considerations

Shortly after Llama 4’s release, a controversy arose over whether Meta “gamed” popular benchmarks to inflate the model’s reported performance. The allegations originated from social media and purported insider comments, claiming that Meta’s leadership pushed the team to include test questions from evaluation benchmarks in the training or fine-tuning data (14). In effect, Meta was accused of training on the very exams (like MMLU, HumanEval, LM Arena reference questions, etc.) that Llama 4 would later be measured against – a form of cheating that could make the model appear better than it truly is. One unverified post went so far as to say a researcher resigned in protest after being asked to “blend test sets from various benchmarks” into the post-training process. This sparked an outcry in the AI community; many argued that if true, it amounts to fraudulent evaluation and betrays the trust of the open-source community . Some pointed out that Llama 4’s surprisingly high scores on certain leaderboards – and its poor performance on new, independent tests not seen before – suggested something was amiss. For example, one discussion noted “It does very poorly on independent benchmarks like Aider” but excelled on benchmarks it knew. This discrepancy fueled the skepticism that Meta might have overfit the model to known metrics.

The ethical debate was intense: AI researchers universally condemn training on test data because it undermines scientific evaluation. If a model gets top scores by memorizing the test, it hasn’t truly advanced the state of the art – it’s just regurgitating answers. Moreover, it could mislead users and developers about the model’s capabilities. In Meta’s case, community members voiced disappointment, given Meta’s otherwise positive stance on open science. Some commentators didn’t mince words, calling it “benchmark fraud” and criticizing the pressure to meet CEO Mark Zuckerberg’s ambitious goals at any cost. The fact that Joelle Pineau, Meta’s AI research lead, reportedly left the company around this time (the rumor mill even used the word “fired”) added fuel – people speculated whether her departure was related to disagreements over such practices. However, it’s important to note that a lot of these claims remain unconfirmed.

Meta’s Response: Officially, Meta denied that it intentionally trained Llama 4 on evaluation benchmarks. Yann LeCun (Meta’s Chief AI Scientist) addressed the issue in a post on X (Twitter), stating that Meta “would never do such a thing” in response to the accusation of training on benchmarks ( 15 ). He explained that any overlap with benchmark data was incidental or part of general pre-training, not a targeted effort to cheat. LeCun and others noted that when you train on a large internet text corpus, some benchmark questions might appear in the training data simply because those questions and answers exist out there in forums or academic papers. In other words, unintentional leakage of test data is a known issue in the field and not unique to Meta. LeCun has himself criticized the heavy focus on benchmarks, noting that many model “improvements” could just be models learning the test rather than truly reasoning. In the case of Llama 4, Meta’s position is that its performance gains are genuine – bolstered by techniques like co-distillation from the larger Behemoth model and better training algorithms (Meta mentions methods like “MetaP” and advanced RLHF in their report) (4) – and not simply a result of exam leakage.

Meta did acknowledge one form of “cheating,” but it was in the context of public leaderboards rather than training data. They revealed that the LMArena Elo scores cited in their blog came from a chat-optimized variant of Llama 4 that was deployed experimentally. Essentially, Meta had an internal version of Llama 4 (optimized for conversation) compete in the online Arena and it scored very high, allowing them to claim top rankings. When this was discovered, Meta explained it as “we were experimenting with different versions, and the one on Arena was a tuned model” . While not as severe as training on test answers, this raised some eyebrows in the community – it’s a reminder that even open model providers can cherry-pick settings to put their best foot forward. Meta’s open release of Llama 4 (the weights on HuggingFace) is exactly what it is, and the community can and will verify its true performance over time with completely held-out evaluations. Indeed, the controversy has already led to calls for more robust, novel benchmarks and transparency. For example, researchers propose testing models on surprise tasks or using tools like EvalHarness that dynamically generate questions to prevent any possibility of training contamination.

In summary, the benchmark controversy around Llama 4 Scout underscores the importance of ethics and transparency in AI development. Meta’s Llama 4 is an impressive step forward – massive context, multimodal, open-source – but the episode has somewhat marred its reception. The community discourse serves as a healthy checkpoint: reminding all AI labs (Meta included) that credibility is earned by playing fair on evaluations. As of April 2025, no definitive proof has shown Meta intentionally cheated, and Meta’s leaders have explicitly denied it ( 15 ). Still, the discussions continue to prompt valuable reflection. For developers and researchers using Llama 4, the takeaway is to trust but verify: validate the model on your own tasks (don’t rely solely on Meta’s reported benchmark numbers), and keep advocating for open, honest benchmarks in the AI ecosystem. The incident also highlights how an open release enables the community to test and discover such issues quickly – a silver lining that ultimately will lead to more robust models and evaluation practices for everyone.

Conclusion

Meta’s Llama 4 Scout represents a significant leap forward in long-context handling capabilities, offering developers a powerful new tool for retrieval-augmented generation and multimodal applications. Its innovative architecture and efficiency make it highly competitive against leading models like Gemini 2.5 Pro and GPT-4o, especially when context size and deployment costs are critical considerations. Despite Scout’s expansive context window, retrieval-augmented generation remains highly relevant today, as it effectively complements Scout’s attention mechanism by selectively retrieving precise and contextually relevant information from extensive datasets it hasn’t explicitly trained on. However, practical limitations around its true context handling capabilities and the recent controversy over benchmark training practices highlight the ongoing need for transparency, careful evaluation, and responsible implementation. Ultimately, while Scout promises substantial potential, developers must remain mindful of its nuances to fully harness its strengths in real-world applications.

Sources

Meta’s Llama 4 is now available on Workers AI https://blog.cloudflare.com/meta-llama-4-is-now-available-on-workers-ai/

Llama 4 Comparison with Claude 3.7 Sonnet, GPT-4.5, and Gemini 2.5 - Bind AI https://blog.getbind.co/2025/04/06/llama-4-comparison-with-claude-3-7-sonnet-gpt-4-5-and-gemini-2-5/

Llama 4 Scout - Intelligence, Performance & Price Analysis https://artificialanalysis.ai/models/llama-4-scout

Meta released Llama 4: A Huge 10mn Context Window, Runs on a Single H100 & Natively Multimodal https://www.rohan-paul.com/p/meta-released-llama-4-a-huge-10mn

RAG in the Era of LLMs with 10 Million Token Context Windows | F5 https://www.f5.com/company/blog/rag-in-the-era-of-llms-with-10-million-token-context-windows.html

LLaMA 4 vs. GPT-4o: Which Is Better for RAGs? https://www.analyticsvidhya.com/blog/2025/04/llama-4-vs-gpt-4o-rag

Gemini 2.5 pushes reasoning forward https://www.contentgrip.com/google-releases-gemini-2-5

Is Gemini 2.5 with a 1M token limit just insane? : r/ClaudeAI https://www.reddit.com/r/ClaudeAI/comments/1jlu8ii/is_gemini_25_with_a_1m_token_limit_just_insane

GPT-4o Context Window is 128K but Getting error model’s maximum… (OpenAI Community) https://community.openai.com/t/gpt-4o-context-window-is-128k-but-getting-error-models-maximum-context-length-is-8192-tokens-however-you-requested-21026-tokens/802809

What is a context window—and why does it matter? - Zapier https://zapier.com/blog/context-window

GPT-4o explained: Everything you need to know (TechTarget) https://www.techtarget.com/whatis/feature/GPT-4o-explained-Everything-you-need-to-know

Gemini 2.5: Our newest Gemini model with thinking (Google/DeepMind) https://blog.google/technology/google-deepmind/gemini-model-thinking-updates-march-2025

Gemini 2.5 Pro is Google’s most expensive AI model yet - TechCrunch https://techcrunch.com/2025/04/04/gemini-2-5-pro-is-googles-most-expensive-ai-model-yet

[AINews] Llama 4’s Controversial Weekend Release • Buttondown https://buttondown.com/ainews/archive/ainews-llama-4s-controversial-weekend-release

Meta cheats on Llama 4 benchmark | heise online https://www.heise.de/en/news/Meta-cheats-on-Llama-4-benchmark-10344087.html

Want a solution like this?

Enter your email here and we'll get in touch with you.